Inside the CPU: Breaking Down the Brain of the Computer

Have you ever wondered what happens when you click a link or run a program?

___________________________________________________________________________________

At the heart of every computer is the CPU (Central Processing Unit) – often called the “brain” of the machine. The CPU coordinates everything: it fetches instructions from memory, decodes them, does the calculations, and then stores the results. Think of the CPU as a busy kitchen in a restaurant: the control unit is the chef telling everyone what to do, the ALU (Arithmetic Logic Unit) is the cook doing the actual chopping and cooking (math and logic), and the registers are little bowls where ingredients (data) are kept handy on the counter (en.wikipedia.org, geeksforgeeks.org). (Later we’ll mention recipes for learning more – see the “Learn More” section for books, kits, and courses.) To visualize this, imagine a detailed block diagram of a CPU showing the control unit, ALU, registers, and buses (data connections).

_____________________________________________________________________

Key Components: Control Unit, ALU, and Registers

Inside the CPU, the Control Unit (CU) acts like an orchestra conductor. It directs the flow of data and instructions, deciding when to fetch the next instruction and which parts of the CPU should be active. According to computer architecture references, “the control unit (CU) is a component of a CPU that directs the operation of the processor. It uses coded instructions to generate the timing and control signals that direct the ALU, memory, and I/O devices” (en.wikipedia.org). In other words, the control unit reads an instruction, breaks it into pieces, and tells the rest of the CPU what to do next.

Meanwhile, the Arithmetic Logic Unit (ALU) is the CPU’s “math brain” – it performs arithmetic (addition, subtraction, and in many designs multiplication and division) and logic operations (AND, OR, comparisons) on binary numbers. As Wikipedia explains, “an arithmetic logic unit (ALU) is a … circuit that performs arithmetic and bitwise operations on integer binary numbers” (en.wikipedia.org). You can think of the ALU as a powerful calculator built into the chip. When a program says “add 2 + 2” or “compare these two values,” the ALU executes that operation.
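To make the “calculator built into the chip” idea concrete, here is a minimal sketch of a toy 8-bit ALU in Python. The operation names (ADD, SUB, AND, OR), the function name alu, and the two flags are illustrative assumptions, not the design of any real processor.

```python
def alu(op, a, b, bits=8):
    """Toy ALU: apply `op` to two unsigned integers, return (result, flags)."""
    mask = (1 << bits) - 1          # 0xFF for an 8-bit ALU
    a &= mask
    b &= mask
    if op == "ADD":
        raw = a + b
    elif op == "SUB":
        raw = a - b
    elif op == "AND":
        raw = a & b
    elif op == "OR":
        raw = a | b
    else:
        raise ValueError(f"unknown operation: {op}")
    result = raw & mask             # keep only the low `bits` bits
    flags = {
        "zero": result == 0,                # handy for comparisons
        "carry": raw > mask or raw < 0,     # carry out on ADD, borrow on SUB
    }
    return result, flags


print(alu("ADD", 2, 2))      # plain addition
print(alu("ADD", 250, 10))   # wraps around in 8 bits and sets the carry flag
print(alu("SUB", 7, 7))      # equal values: the zero flag is set
```

Notice that “compare these two values” falls out of subtraction here: if SUB leaves the zero flag set, the two inputs were equal.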

Working alongside the control unit and ALU are the registers – very small, very fast storage locations inside the CPU. Registers hold data that the CPU is actively using. For example, one register might hold the current number being processed, another might hold the address of the next instruction, and so on. A good analogy is scratch paper on your desk: instead of going back to the filing cabinet (main memory) every time, you write temporary results on the nearest notepad (register) because it’s much faster. Indeed, CPU registers are “high-speed memory units essential for efficient program execution,” storing frequently used values so the CPU can access them almost instantly (geeksforgeeks.org). Registers are faster than any cache or RAM – they sit inside the core itself and can typically be accessed within a single CPU cycle.

Special-purpose registers (like the Instruction Register and the Program Counter) help keep track of which instruction is being executed and where to find the next one in memory. Together, these parts – the control unit, ALU, and registers – form the core of the CPU’s internal data path and control logic.

_____________________________________________________________________

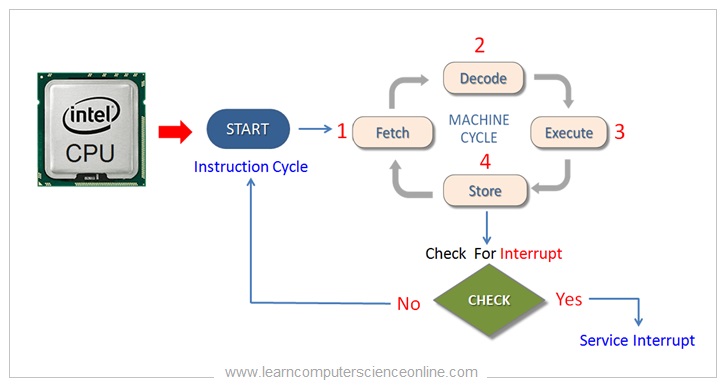

The Instruction Cycle: Fetch, Decode, Execute

So how does the CPU actually run a program? It follows a very regular instruction cycle (also called the fetch-decode-execute cycle). Each cycle has three main steps:

- Fetch: The CPU retrieves (fetches) the next instruction from memory. It uses a special register called the Program Counter (PC) to know the address of the next instruction. The PC is like a bookmark in a recipe book – it tells the CPU where to look next. After fetching, the PC increments to point to the following instruction.

- Decode: The CPU then decodes the fetched instruction – meaning it figures out what operation is required and which operands (data) are involved. This is where the control unit shines: it reads the instruction’s binary code and generates signals to route data to the right parts of the ALU or registers.

- Execute: Finally, the ALU (or other functional unit) performs the operation. This might be an arithmetic calculation (like adding two numbers in registers), a logical test (compare values), or a data movement (load/store from memory). Once done, any results are written back to a register or memory.

Analogy: Think of the CPU like someone following a cookbook to bake cookies. Fetch is like reading the next step in the recipe. Decode is understanding that step (e.g. “add eggs and sugar together”). Execute is actually doing it (mixing the eggs and sugar). Then you read the next line, decode it (“stir in flour”), and so on. Each instruction guides the process step-by-step.
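The fetch, decode, and execute steps above can be sketched as a tiny loop in Python. The three-instruction “machine language” here (LOAD, ADD, HALT), the register names, and the tuple format are all made up for illustration; a real CPU works on binary-encoded instructions.

```python
# Hypothetical instruction format: (opcode, operand, operand, ...)
PROGRAM = [
    ("LOAD", "r0", 2),           # r0 = 2
    ("LOAD", "r1", 3),           # r1 = 3
    ("ADD", "r2", "r0", "r1"),   # r2 = r0 + r1
    ("HALT",),
]


def run(program):
    registers = {"r0": 0, "r1": 0, "r2": 0}
    pc = 0  # program counter: index of the next instruction
    while True:
        instruction = program[pc]          # fetch
        pc += 1                            # PC now points at the following instruction
        opcode, *operands = instruction    # decode: split operation from operands
        if opcode == "LOAD":               # execute
            dest, value = operands
            registers[dest] = value
        elif opcode == "ADD":
            dest, src1, src2 = operands
            registers[dest] = registers[src1] + registers[src2]
        elif opcode == "HALT":
            return registers
        else:
            raise ValueError(f"unknown opcode: {opcode}")


print(run(PROGRAM))  # r2 ends up holding 2 + 3
```

Each pass through the while loop is one trip around the instruction cycle, with the PC playing the role of the bookmark.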

_____________________________________________________________________

Memory, Buses, and I/O: How the CPU Connects with the World

The CPU doesn’t operate in a vacuum. It interacts with memory and peripherals via buses. A system bus is like a highway of wires connecting the CPU to RAM (main memory), storage, and I/O devices (keyboard, disk, etc.). This includes an address bus (telling memory which location to access) and a data bus (carrying the actual bits to/from memory). For example, to read a value from RAM, the CPU puts the target address on the address bus, sets the control line to “read”, and then reads the data from the data bus when memory responds.

In practice, CPUs use specialized registers to communicate: the Memory Address Register (MAR) holds the address of the data the CPU wants, and the Memory Data Register (MDR) holds the data just read or to be written. The address bus is unidirectional (CPU → memory), while the data bus is bidirectional. In the words of a systems reference, “the address bus … conveys memory addresses exclusively from the CPU to memory modules. The CPU uses the address bus to convey the target address when commencing a read or write” (geeksforgeeks.org). Then the data bus carries the retrieved data back to the CPU or sends data out to memory.
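Here is a rough sketch of that read/write handshake in Python. The class names Memory and CPU, the access method, and the string control signals are assumptions chosen for readability; real buses are wires and timing signals, not method calls.

```python
class Memory:
    def __init__(self, size):
        self.cells = [0] * size

    def access(self, address_bus, control, data_bus=None):
        """Respond to whatever the CPU has placed on the buses."""
        if control == "read":
            return self.cells[address_bus]      # value travels back on the data bus
        if control == "write":
            self.cells[address_bus] = data_bus  # value arrives on the data bus
            return None
        raise ValueError(f"unknown control signal: {control}")


class CPU:
    def __init__(self, memory):
        self.memory = memory
        self.mar = 0  # Memory Address Register
        self.mdr = 0  # Memory Data Register

    def read(self, address):
        self.mar = address                                 # address goes out (CPU -> memory)
        self.mdr = self.memory.access(self.mar, "read")    # data comes back
        return self.mdr

    def write(self, address, value):
        self.mar = address
        self.mdr = value
        self.memory.access(self.mar, "write", self.mdr)    # data goes out


ram = Memory(16)
cpu = CPU(ram)
cpu.write(5, 42)
print(cpu.read(5))
```

The address only ever flows from CPU to Memory, while the value flows both ways, which mirrors the unidirectional address bus and bidirectional data bus described above.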

Think of memory as a large library of books (data). The CPU’s registers are your work desk where you keep the book you’re currently reading. The address bus is like giving the librarian a call number to fetch a particular book off the shelf, and the data bus is like carrying the pages back and forth. Because retrieving data from the library (RAM) is relatively slow, we use this hierarchy of storage to improve speed (more on that soon).

Peripherals (printers, drives, USB devices) also connect via the bus or via special controllers. The CPU can talk to them by sending signals on control lines or through interrupts. An interrupt is like a ring on the doorbell: if a device needs attention (like data arrived from the network), it signals the CPU, which can pause its current work, save the state, and run an interrupt handler routine.
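A very simplified sketch of the doorbell idea, in Python: before each step of the main program, the CPU checks whether a device has rung, and if so it saves its place, runs a handler, and resumes. The function name, the dictionary of interrupts, and the log messages are hypothetical; real interrupt handling involves hardware signals and saved register state.

```python
def run_with_interrupts(main_steps, interrupts):
    """`interrupts` maps a step index to the device that signals just before that step."""
    log = []
    pc = 0
    while pc < len(main_steps):
        device = interrupts.get(pc)
        if device is not None:
            saved_pc = pc                              # save the current state
            log.append(f"interrupt: handle {device}")  # run the handler routine
            pc = saved_pc                              # restore state and resume
        log.append(f"main: {main_steps[pc]}")
        pc += 1
    return log


log = run_with_interrupts(["step A", "step B", "step C"], {1: "network card"})
for line in log:
    print(line)
```

The main program never has to poll the network card itself; it simply gets paused and resumed around the handler.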

Analogy: The buses are the CPU’s communication highways. Imagine the CPU as a city hall sending mail: the address bus is the postal address on an envelope, the data bus is the actual letter inside. The CPU puts the address (memory location) on one lane, and the data travels on another lane.

_____________________________________________________________________

Speeding Up: Pipelining, Multicore, and Cache Hierarchies

Modern CPUs are far more complex than a single fetch-decode-execute loop. To boost performance, designers use techniques like pipelining, multiple cores, and cache hierarchies.

Pipelining: An Assembly Line for Instructions

Instead of completing one instruction entirely before starting the next, pipelining overlaps them. Think of an assembly line: while one instruction is being executed, another can be decoded, and yet another can be fetched, all at the same time but at different stages. This way, each clock tick completes part of multiple instructions, greatly increasing throughput.

As one source puts it, pipelining is “like an assembly line for instructions. Each part of an instruction is worked on by a different part of the processor at the same time. This speeds up how quickly a sequence of instructions is completed.” (medium.com). For example, in a simple 4-stage pipeline (Fetch → Decode → Execute → Writeback), up to four instructions can be in flight at any moment, each at a different step.

Pipelining isn’t magic, though. It introduces hazards. For instance, if one instruction needs the result of a previous one, the pipeline might have to stall or use forwarding techniques. Branch instructions (if-then decisions) can also disrupt the flow unless the CPU predicts correctly. Despite these challenges, pipelining is a staple of modern CPU design and keeps the internal “workshop” busy.
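To see the assembly line in action, here is a small Python sketch that prints which instruction occupies each stage on every clock cycle. It assumes an ideal pipeline with no stalls or branch mispredictions, and it assumes each stage takes exactly one cycle; the function names are illustrative.

```python
STAGES = ["Fetch", "Decode", "Execute", "Writeback"]


def pipeline_schedule(num_instructions, stages=STAGES):
    """Per clock cycle, map each busy stage to the instruction inside it (no stalls)."""
    total_cycles = num_instructions + len(stages) - 1
    schedule = []
    for cycle in range(total_cycles):
        row = {}
        for stage_index, stage in enumerate(stages):
            instruction = cycle - stage_index  # instruction that entered the pipeline this many cycles ago
            if 0 <= instruction < num_instructions:
                row[stage] = f"I{instruction + 1}"
        schedule.append(row)
    return schedule


def cycles_without_pipeline(num_instructions, stages=STAGES):
    """Each instruction finishes all stages before the next one starts."""
    return num_instructions * len(stages)


schedule = pipeline_schedule(5)
for cycle, row in enumerate(schedule, start=1):
    print(f"cycle {cycle}: {row}")
print(len(schedule), "cycles pipelined vs", cycles_without_pipeline(5), "without")
```

With n instructions and k stages, the ideal pipelined count is n + k - 1 cycles instead of n × k. Hazards and mispredicted branches add stall cycles on top of that ideal figure.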

_____________________________________________________________________

Multiple Cores: Many Brains in the Processor

Modern CPUs usually have multiple cores, meaning essentially multiple CPUs on one chip. Each core has its own ALU, registers, and (often) L1 cache, and can run instructions independently. In effect, this is like having several cooks in the kitchen, each with their own workspace. More cores mean the computer can handle truly parallel tasks.

For example, if you’re editing a video and browsing the web at the same time, different threads of each program can run on different cores simultaneously. A CPU with 4 or 8 cores can genuinely process 4 or 8 instruction streams in parallel. As one blog explains, “A CPU core is a physical processing unit … A CPU can have multiple cores, which allows it to run multiple tasks simultaneously. The more cores a CPU has, the more independent tasks the processor can run concurrently.” (namehero.com).

Some CPUs take this further with hyper-threading (Intel’s name for simultaneous multithreading, which presents each physical core as two logical cores), but fundamentally, multicore design avoids the diminishing returns of simply cranking clock speed higher (which hits power and heat limits). Think of multicore as parallelizing work: if you have 4 people cooking (4 cores), you can prepare 4 dishes faster than one person making them one after the other.
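The cooks-and-dishes arithmetic can be written down in a few lines of Python. This is an idealized model that assumes every task is independent and takes the same time, which real workloads rarely do; the function name and the one-second task length are made up for the example.

```python
import math


def time_to_finish(num_tasks, num_cores, seconds_per_task=1.0):
    """Ideal completion time when equal, independent tasks are spread across cores."""
    rounds = math.ceil(num_tasks / num_cores)  # each round, every core takes one task
    return rounds * seconds_per_task


print(time_to_finish(4, 1))  # one cook, four dishes
print(time_to_finish(4, 4))  # four cooks, four dishes
print(time_to_finish(5, 4))  # a fifth dish forces a second round
```

The last line hints at why adding cores does not always help evenly: the work has to divide up cleanly to keep every core busy.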

_____________________________________________________________________

Cache Hierarchies: Keeping Data Close to the CPU

Because main memory (RAM) is much slower than the CPU, modern processors use cache memory – small amounts of very fast memory on or near the CPU – to store recently used data and instructions. Most CPUs have a hierarchy of caches: a tiny L1 cache (closest to the core, e.g. 32–128 KB), a larger but slower L2 cache (hundreds of KB to a few MB), and often an even larger L3 cache shared between cores.

The idea is simple: when the CPU needs some data, it first checks L1 (fastest). If it’s not there (cache miss), it checks L2, then L3, before going to the much slower main memory. As one reference notes, “many computers use multiple levels of cache, with small fast caches backed up by larger, slower caches. Multi-level caches generally operate by checking the fastest (L1) first; if it hits, the processor proceeds at high speed. If that cache misses, the slower but larger L2 is checked, and so on” (en.wikipedia.org).

This hierarchy capitalizes on the principles of locality: programs tend to use the same data/instructions repeatedly (temporal locality) or access data near each other in memory (spatial locality). Caches leverage this by keeping the “hot” data ready. You can imagine this like working at a desk: you keep the papers you need right in front of you (L1), extra reference material in a nearby drawer (L2), and rarely-used files in a filing cabinet across the office (main RAM). Most of the time, what you need is already within reach, so slow trips to the cabinet are rare.
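The lookup order and the payoff from temporal locality can be sketched in Python. The capacities (2, 4, and 8 entries) and latencies (4, 12, 40, and 200 cycles) are invented round numbers for illustration, not measurements of any real chip, and the least-recently-used eviction policy is an assumption; real caches vary.

```python
from collections import OrderedDict


class CacheLevel:
    def __init__(self, name, capacity, latency):
        self.name = name
        self.capacity = capacity    # how many addresses this level can hold
        self.latency = latency      # hypothetical cost in cycles to check this level
        self.lines = OrderedDict()  # least recently used entry comes first

    def lookup(self, address):
        if address in self.lines:
            self.lines.move_to_end(address)  # mark as most recently used
            return True
        return False

    def insert(self, address):
        self.lines[address] = True
        self.lines.move_to_end(address)
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)   # evict the least recently used entry


def access(address, levels, ram_latency=200):
    """Check L1, then L2, then L3, then RAM; return (where it was found, cycles spent)."""
    cycles = 0
    missed = []
    found = "RAM"
    for level in levels:
        cycles += level.latency
        if level.lookup(address):
            found = level.name
            break
        missed.append(level)
    else:
        cycles += ram_latency  # every cache level missed
    for level in missed:
        level.insert(address)  # keep a copy close by for next time
    return found, cycles


levels = [CacheLevel("L1", 2, 4), CacheLevel("L2", 4, 12), CacheLevel("L3", 8, 40)]
trace = [access(address, levels) for address in [100, 100, 101, 102, 100]]
for entry in trace:
    print(entry)
```

The first touch of address 100 pays the full trip to RAM, and the immediate repeat is served from L1. After two other addresses push it out of the tiny L1, it is still caught by L2, which is far cheaper than going back to RAM.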

Modern CPUs may also use techniques like speculative execution and out-of-order execution (beyond our scope here) to further improve efficiency, but pipelining, multiple cores, and multi-level caches are the bread-and-butter.

_____________________________________________________________________

Putting It All Together

All these pieces – the control unit, ALU, registers, caches, and cores – must work together seamlessly. The control unit orchestrates the fetch-decode-execute steps, the ALU performs the calculations, registers hold data for the ALU, and caches reduce waiting for memory. With pipelining and multiple cores, dozens or hundreds of instructions are being juggled in parallel at any instant.

Analogy Recap: Imagine a modern kitchen: the head chef (control unit) reads many recipes (instructions), while sous-chefs (ALUs) prepare ingredients (arithmetic/logic). Each cook has bowls of ingredients (registers) ready at their stations. There are multiple cooking stations (cores) so several dishes can be prepared at once. Quick snacks are kept on the counter (L1 cache) and bulk ingredients in the pantry (RAM). The chef keeps the process moving smoothly, pulling out ingredients and giving each cook orders just in time. This teamwork lets the kitchen serve a full menu fast.

Thanks to these innovations, CPUs today can run billions of instructions per second. Whether the chip sits in your smartphone or in a data center server, understanding the CPU’s “inner life” helps us appreciate how complex tasks are broken down into simple steps.

_____________________________________________________________________

Learn More: Books, Kits, and Courses

If you’re curious to dive deeper (or start building projects of your own), here are some recommended resources and kits:

- Books: Computer Organization and Design by Patterson & Hennessy https://amzn.to/4jZT2r2 – a classic textbook for learning CPU architecture. Another approachable read is Code: The Hidden Language of Computer Hardware and Software by Charles Petzold https://amzn.to/4d7gkcy, which explains hardware fundamentals in a story-like way.

- Microcontrollers & Kits: Try a Raspberry Pi 4 Starter Kit https://amzn.to/4jMiwbZ to experiment with a real ARM-based computer. Or get an Arduino Uno Starter Kit https://amzn.to/4m1I0n0 to learn about digital logic and simple processing in hardware. Hands-on kits help reinforce how instructions control electronic circuits.

- Online Courses: Check out Coursera’s Computer Architecture course (free to audit) https://www.coursera.org/learn/comparch to see video lectures on CPUs, pipelines, and more. Many universities offer open lectures on computer organization. Even an electronics course or Arduino tutorial can give insight into how software translates to hardware actions.

Whether you read, watch, or build, understanding the CPU is an exciting journey into how our digital world works under the hood.
